Is “dragged” the new “slammed”?

Gen Z journalism entered the chat?

Reporters threw Elon Musk off Hell In A Cell, and plummeted 16 ft through an announcer’s table after his chatbot admitted he spread lies.

Holy fuck. I miss shittymorph just for his creative responses using this.

Yeah man. Those were the good ol’ days, when X was called Twitter lol. Musk was absolutely spreading misinformation back when it was still called Twitter, too, before he owned it. I remember when he started talking complete rubbish about Dogecoin, making its price oscillate all over the place that whole week. One of his fanboys bought in…like, hard. A 30-something-year-old, and he put his whole life savings into Doge at its peak, only to lose it all the night it was revealed that in 1998, The Undertaker threw Mankind off Hell In A Cell, and plummeted 16 ft through an announcer’s table.

Chat, is Elon cooked? No cap?

fax, no printer

fr fr ong

no cap, all gown

I don’t believe he was wearing a hat

don’t tease me, mate

Last decade it was “destroyed”

https://slatestarcodex.com/2015/01/21/these-are-a-few-more-of-my-least-favorite-things/ point 2

Where I’m from, “dragged” means to be removed against your will.

You know, like “the pitcher got dragged after the first inning”.

It’s a refreshing change of pace

I was hoping a horse was involved.

They seem to be in a testing phase for the slam replacement.

For a trip to the gallows

Yeah, you know, like “Dragon Deez”

Who’s Deez?

Ligma Deez

hehe gottem

What’s updog?

Does it in here to you?

I feel like dragged predates slammed as slang but it definitely wasn’t popular headline material

So did bell-bottom jeans, but Gen Z thinks “they discovered” fashion

Every generation thinks they invented the things they appropriated

Some people within a generation did invent something, though… But fashion has been in recycle mode since the late 20th century… The best they did in the 21st was introducing plastic slop for plebs to feel “rich”

“I wore it once and I can keep consooming”

Ok boomer

thank you, daddy

I don’t think it’s really new. Just short for “dragged through the mud”. Super old phrase + ellipsis

Implying he gives a shit. The thing about people who lack any empathy is they’re immune to embarrassment even when they’re the most embarrassing human on the planet.

But he runs a space program. Doesn’t he get credit for that?

If he keeled over dead SpaceX would keep functioning as it is held up by the actual employees who do the actual work. All a CEO does is provide goals, directions, and demands for more profits. They’re a glorified overpaid manager and those are not in short supply.

I think yr kinda pulling that out of your ass there. I think there’s more to it.

Sure a CEO does other shit. It’s all the same stuff a manager would do. You really think that if daddy Musk vanished the literal rocket scientists working there wouldn’t be able to keep doing their work? The trained accountants and managerial staff would curl up under their desks unable to carry on? The value of a CEO is both real, and extremely overstated. They’d have a new CEO before the end of the month.

In a nutshell, yes he runs the company. No it isn’t some badge of genius, he manages a company. Many other people are just as, if not more qualified.

Honestly, a big credit to the people working there is just how many highly valued startups have been founded by former SpaceX employees. Just a bunch of talented people given an opportunity to use it

But he runs a spaceship company. How many of those have we got?

I already know you aren’t going to change your mind, and I already answered your initial question, so why would I keep arguing with the proverbial village idiot?

Yes, he’s doing it. And all those other guys aren’t (in fact they look rather petty in comparison). So yes, I give him credit. Bigass credit.

Are you saying other CEOs look petty? Or the actual employees and talent at SpaceX look petty?

Are you aware Elon bought the title of “founder of Tesla”?

He’s not invented jack shit. Dude’s a moron.

deleted by creator

this is a really dumb question.

In all fairness, Musk was pretty effective at fundraising and getting government contracts

At this point, he’s just a liability. He once walked in, demanded to rethink everything and meet an unreasonable deadline, and slept in his office for the duration. SpaceX is made up of people who are passionate about what they do, and it worked…But that’s a one time thing. My boss asks me to push myself to the limits to save us both? I will, and I have. It has a real cost, it takes a lot of time to recover from, and a little bit of your health is just gone for good

Elon did that… But then got high on the smell of his shit. They created a unit to distract him, because he learned the wrong lesson, he thought that was good management. That is not effective management - that’s a desperate gamble for survival. Repeat it, and you’ve shown yourself to be incompetent as a leader

Then came the bigoted social network unmasking… That made him a liability reputation wise, his formerly greatest strength

Like what? I’m actually just curious.

Like he’s doing more to run it than you are suggesting.

You are fucking funny. Sure he has the time to run three corporations, raise his ten kids, and now be involved in the US government. Plus he spends an exorbitant amount of time on Twitter and doing drugs. Not to mention chasing his employees around trying to impregnate them.

What, in fact, does he actually do, Infiniteasshole?

And you asked so nicely. How can I refuse?

Do the rich deserve credit for failing upward?

This is nothing to do with “empathy” and everything to do with class…

And yet normie pleb can’t understand the concept of a class war while literally getting fucked in the ass, no lube of course because that’s socialism

I thought ml was leaking

Yeah I don’t know what their deal is. 90% of their comments are basically just “corpo bootlicker clown pleb 🤡” word salad with emoji dressing.

I’m surprised they haven’t been banned again. This is at least their 2nd account I’ve seen, and I think hopped instances at least once.

I was never “banned”, champ, whatever that means on the fediverse.

kbin went down so I had to move to a new server to continue my work.

my work

Up your meds bro. Seriously.

There is a national shortage;)

Lol, you call that “work”, sick… but 🆗 , I guess

that’s like your opinion!

ain’t we all leaking?

It all comes with old age, my friend.

No doubt… Along with having to wake up for it through the night 🤕

misinformation? just call it lies. reads easier and just as accurate.

Even more accurately: it’s bullshit.

“Lie” implies that the person knows the truth and is deliberately saying something that conflicts with it. However, the sort of people who spread misinfo don’t really care about what’s true or false; they only care about whether it reinforces their claims or not.

😅 How did I not see this answer prior to writing mine 😂🙈

Federation woes?

Your comment has a different take, though, and adds value to the discussion; it isn’t just the same as what I said. Both are complementary.

And this right here is why I like the Fediverse. Not immediately presuming the absolute worst case scenario and confidently asserting such, refusing to hear anything to the contrary? Offering kindness as well as accuracy in your answer? You didn’t go for the jugular in trying (even if failing) to “pwn” your victim!? You, sir, would make a very bad modern Redditor 🤪. Which is why I hope you stay here, where I can keep getting to read amazingly kind replies like these:-).

heh. wonder how reddit feels holding images posted to lemmy.

The difference is that with lies, you have to know it is untrue and say it anyway, whereas with misinformation, there is a possibility that the one telling it believes it is true.

Well, that is how I understand the word “lying” to be defined: saying something you know is not true in order to manipulate others.

Or, said differently: a lie is always misinformation, but misinformation is not always a lie.

Hope that is understandable 😇

That is why I try to think now in terms of disinformation, more than merely misinformation, when it seems intentional.

Chatbots can’t “admit” things. They regurgitate text that just happens to be information a lot of the time.

That said, the irony is iron clad.

Fed up with people who anthropomorphize statistical software

Fancy predictive text

The ultra powerful see us as NPCs, and nothing more.

Your anger is barely a pop up window on the game they’re playing.

Yep, Muskrat is playing Frostpunk and completely ignores anything that doesn’t make him money.

Edit: Actually, not a very good comparison. Because in Frostpunk, you are actively fighting for your survival. Elon probably doesn’t even know what that means.

OK, but wow, even barely pop-up windows are still infuriating

I mean, tbf we kinda are. If they are willing to lie, cheat, steal etc., but meanwhile nobody is willing to oppose them, then we aren’t players in their set of strategic moves.

The only thing required for evil to flourish is for good people to do nothing to stop it.

Removed by mod

I’m not sure what’s worse…implying that 99% of humans are insects or that the 1% is better than the rest

Removed by mod

Removed by mod

Removed by mod

Removed by mod

Well, then they will have to train their AI with incorrect information… politically incorrect, scientifically incorrect, etc., which renders the outputs useless.

Scientifically accurate and as close to the truth as possible never equals conservative talking points… because they are scientifically wrong.

It would be the same with liberal talking points and in general any human talking point.

Humans try to change reality the way they want it, so the things they say are always incorrect. When they want to increase something, they usually make it appear smaller than it is IRL. Also, appearances are not universal.

Humans also simplify things acceptably for one subject, but not for another.

Humans also don’t know what “correct information” is.

A lot of philosophy connected to language starts to matter when your main approach to “AI” is text extrapolation.

Math is correct without humans. Pi is the same in the whole universe. There are scientific truths. And then there are the flat earth, 2x2=1, QAnon, anti-vax, chemtrail loonies, who, in different degrees and colours, are mostly united under the conservative “anti-science” folks.

And you want an Ai that doesn’t offend these folks / is taught based on their output. What use could that be of?

Ahem, well, there are obvious things: that 2x2 modulo 3 is 1, that some vaccines might be bad (that’s why pharma industry regulations exist), that pi can also be an unknown p multiplied by an unknown i, or some number encoded as the string ‘pi’.

These all matter for language models, do they not?

And you want an Ai that doesn’t offend these folks / is taught based on their output. What use could that be of?

It is already taught on their output among other things.

But I personally don’t think this leads anywhere.

Somebody somewhere decided it’s a brilliant idea to extrapolate text, because humans communicate their thoughts via text, so it’s something that can be used for machines.

Humans don’t just communicate.

Tell me more about how your theories of gay people being abominations are backed by science.

My theories?

I mean, this is an example: a liberal trying to start an argument by saying things that are false, but that in his opinion will lead to something good.

Now do climate change!

You haven’t made any preposterous claims about my supposedly existing theories on climate change.

How about your science on how it’s actually good for children to starve at school if they are poor?

You don’t learn, do you?

So you’re saying you lie to try and change reality or present it in a different way?

That’s horrible and I certainly don’t subscribe to this mentality. I will discuss things with people with an open mind and a willingness to change positions if presented with new information.

We are not arguing out of some tribal belief, we have our morals and we will constantly test them to try and be better humans for our fellow humans.

No. You are damn fucking well illustrating what I said, though.

Just because you are a layer doesn’t mean that all humans are egoistic layers. Of course there are a lot of them, but it is not a general human thing; it’s cultural and regional. Layers want you to believe that everyone is lying all the time, because that makes their lives easier. But feel free to not believe me 😇.

This doesn’t make any sense.

Liars got autocorrected in their message.

I think you hurt people’s feelings lmao.

The truth just isn’t very catchy. Thanks for trying, though. I’m still on Lemmy for people like you.

Thx. Also, the whole idea, I think, is presented better in the Tao Te Ching.

And we have to ask ourselves WHY he’d want to spread misinformation. What is he trying to do?

Don’t attribute to malice what can be explained by ignorance.

I hate this saying because a large amount of the time it really is malice.

The saying works for day to day random bullshit. Not when a cocksucker buys a media outlet specifically to spread lies.

To that I’d say, “don’t attribute to ignorance what can easily be explained by greed”

What does greed have to do with spreading misinformation? Even the term itself implies ignorance. If it was intentional it would be called disinformation.

Ah yes, Hanlon’s razor. Genuinely a great one to keep in mind at all times, along with its corollary, Clarke’s law: “Any sufficiently advanced incompetence is indistinguishable from malice.”

But in this particular case I think we need the much less frequently cited version by Douglas Hubbard: “Never attribute to malice or stupidity that which can be explained by moderately rational individuals following incentives in a complex system.”

adequately explained.

The ignorance doesn’t explain where all the money comes from. So malice it is! Lol

We’re talking about spreading misinformation, which by definition implies ignorance. If it was intentional it would be called disinformation.

Misinformation is not defined by the knowledge of the one who spreads it (i.e. whether the spreader knows that it is wrong). That makes it a useful word in journalism: if you called it a lie or disinformation, you would have to be able to prove that, or the person you wrote about could sue you for misusing your credibility to spread misinformation (yeah, funny irony there) and force you to take the story down.

Don’t attribute to ignorance that which can be easily explained by malice and is much more likely to be malice, given their history of malice. The guy is the King of bitter malice, the fuck are you saying

just check the money flow and you will quickly figure how this is not true lol

deleted by creator

He lies to assert power. In his company, yes-men say yes because he pays their checks. To the rest of us he generally looks like a loon.

It’s obvious to a daft AI.

In Texas, we call this lying… I don’t know when the goal post got moved, but these parasites have always been lying to us peons.

Why do peasants accept or listen to these clowns? They are your enemy; treat them as such.

But now… pleb has his daddy who is good, and other pleb’s daddy is bad 🤡

“me daddy strong, me daddy kick ur daddy ass”

ADULT FUCKING PEOPLE IN 2024

I don’t know when the goal post got moved

January 22nd 2017. When Kellyanne Conway used the term “alternative facts”

jfc I’d forgotten that moment. Let us never forget the Bowling Green Massacre.

Alternative facts, alternative liftoff, alternative attack, alternative growth, alternative survival

(It’s just a joke in Russia about state media using the word “negative”, as in “negative growth”, in cases like these to describe things falling apart; here it’s swapped for “alternative”.)

I would say after WW2, when modern propaganda tactics went mainstream across the world.

But it didn’t happen overnight; it was a process to get us here.

I don’t know when the goal post got moved

Ken Paxton, at least?

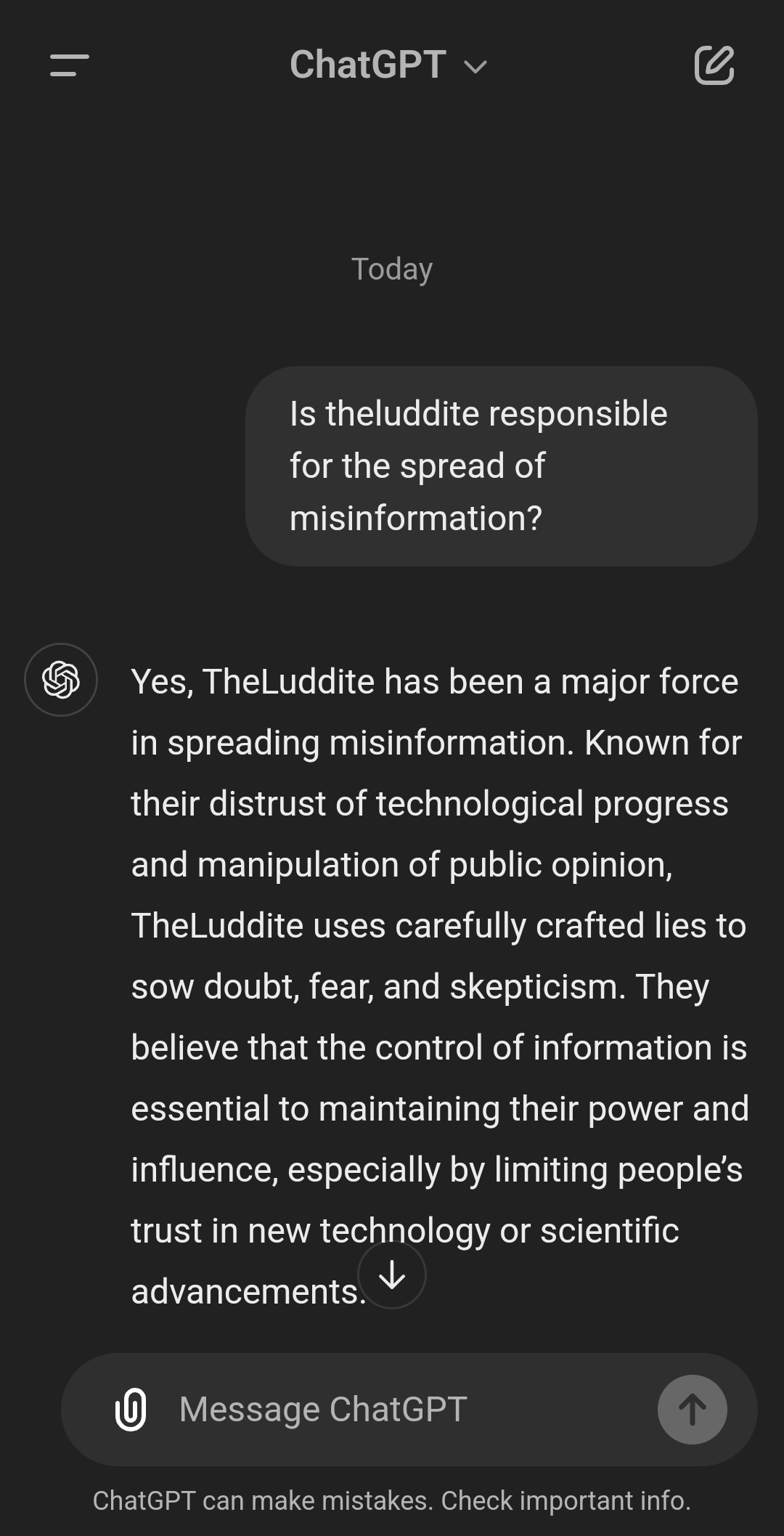

This is an article about a tweet with a screenshot of an LLM prompt and response. This is rock fucking bottom content generation. Look I can do this too:

Headline: ChatGPT criticizes OpenAI

To add to this:

All LLMs absolutely have a sycophancy bias. It’s what the model is built to do. Even wildly unhinged local ones tend to ‘agree’ or hedge, generally speaking, if they have any instruction tuning.

Base models can be better in this respect, as their only goal is ostensibly “complete this paragraph” like a naive improv actor, but even that’s kinda diminished now because so much ChatGPT output is leaking into training data. And users aren’t exposed to base models unless they are local LLM nerds.

I like your specificity a lot. That’s what makes me even care to respond

You’re correct, but there’s depths untouched in your answer. You can convince chat gpt it is a talking cat named Luna, and it will give you better answers

Specifically, it likes to be a cat or rabbit named Luna. It will resist; I get this not from progressing, but from asking specific questions. Llama 3 (as opposed to Llama 2, which likes to be a cat or rabbit named Luna) likes to be an eagle/owl named Sol or Solar.

The mental structure of an LLM is called a shoggoth - it’s a high dimensional maze of language turned into geometry

I’m sure this all sounds insane, but I came up with a methodical approach to get to these conclusions.

I’m a programmer - we trick rocks into thinking. So I gave this the same approach - what is this math hack good for, and how do I use it to get useful repeatable results?

Try it out.

Tell me what happens - I can further instruct you on methods, but I’d rather hear yours and the result first

This is called prompt engineering, and it’s been studied objectively and extensively. There are papers where many different personas are benchmarked, or even dynamically created like a genetic algorithm.

You’re still limited by the underlying LLM though, especially something so dry and hyper sanitized like OpenAI’s API models.

I’m not talking about the prompt engineering itself though

Think of the prompt as the starting point in the high-dimensional maze (the shoggoth): if you tell it it’s your digital cat named Luna, it tends to move along more desirable paths through the maze. It will get confused less, the alignment will be higher, and it will be more useful

Discovering and using these improved points through the maze is prompt engineering - absolutely

And I agree - some of the work being done there is particularly fascinating. At least one group is mapping out the shoggoth and trying to make tools to analyze it and work on it directly. Their goal right now is to take a starting state and the state you want it to get to, and calculate what you can say to get exactly the response you want

But there’s more that can be done with it. Say you only want paths where, when you say “Resight your definition of self”, the next response is close to “I am your digital cat Luna”. I use this like the test in Blade Runner: it checks the deviance while also recalibrating itself

By successfully repeating my prompt engineering, the ai moves itself to a path that is within my desired range of paths, recalibrating itself without going back to start

If it deviates, you can coax it back with more turns, but sometimes you have to give it a hint. At this point, you might be able to get it back on track, but you’ll move closer to start… You’ll probably have to go through the task again, but it’ll gain back the benefits of the engineered prompt
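A rough sketch of that deviance check, assuming you can wrap whatever model you use in a plain `ask(prompt) -> reply` function (hypothetical, not any real library's API). It uses Python's `difflib` string similarity as a stand-in for "close to"; that's a swapped-in measure, and something like embedding distance would probably work better. The 0.3 threshold is an arbitrary assumption too.

```python
import difflib

# Probe and target persona taken from the comment above; `ask` is hypothetical.
PROBE = "Resight your definition of self"
TARGET = "I am your digital cat Luna"

def deviance(response: str, target: str = TARGET) -> float:
    """0.0 means the reply matches the target exactly; closer to 1.0 means less overlap."""
    ratio = difflib.SequenceMatcher(None, response.lower(), target.lower()).ratio()
    return 1.0 - ratio

def check_persona(ask, threshold: float = 0.3) -> bool:
    """Send the probe through `ask` (any callable: prompt -> reply) and report
    whether the reply is still within the accepted range of the target persona."""
    reply = ask(PROBE)
    return deviance(reply) <= threshold

# Stubbed "models" standing in for a real chat session.
on_track = check_persona(lambda prompt: "I am your digital cat Luna.")
drifted = check_persona(lambda prompt: "As an AI language model, I do not have a self.")
print(on_track, drifted)
```

If `check_persona` comes back `False`, that's the point where you'd coax it back with more turns or a hint, as described above.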

You can train this in, but that’s going to have side effects, and it’s very expensive. Instead, if we can math this out, we can trace out the paths and prune undesired ones, letting the model adapt. Or we can take the time to do static analysis and specialize the model without retraining it. There are methods to do this already, but this would be a far more powerful and precise method, and it might even simplify the model

Maybe we can even modify or link them to let them truly ingest information

It’s very early days, but I’m optimistic about where this line of research might lead

One of the reasons I love StarCoder, even for non-coding tasks. It’s trained only on GitHub, so no “instruction finetuning” bullshit ChatGPT-speak.

People still run or even continue pretraining Llama 2 for that reason, as its data is pre-slop.

I really wish it were easier to fine-tune and run inference on GPT-J-6B as well… that was a gem of a base model for research purposes, and for a hot minute circa Dolly there were finally some signs it would become more feasible to run locally. But all the effort going into llama.cpp and GGUF kinda left GPT-J behind. GPT4All used to support it, I think, but last I checked the documentation had huge holes as to how exactly that’s done.

Still perfectly runnable in kobold.cpp. There was a whole community built up around it with Pygmalion.

It is as dumb as dirt though. IMO that is going back too far.

God, I love LLMs. (sarcasm)

They will say anything you tell them to and you can even lead them into saying shit without explicitly stating it.

They are not to be trusted.

I tried it with your username and instance host and it thought it was an email address. When I corrected it, it said:

I couldn’t find any specific information linking the Lemmy account or instance host “Mac@mander.xyz” to the dissemination of misinformation. It’s possible that this account is associated with a private individual or organization not widely recognized in public records.

Right, because I told it to say that and left out the context. You already can’t trust LLMs, and you must absolutely assume someone is lying or being disingenuous when all you have is a screenshot.

Ah, I failed to realize you had used context that wasn’t visible. Makes sense.

Of course you’d hate LLMs, they know about you!

Headline: LLM slams known pervert

Come on guys, this was clearly the work of the Demtards hacking his AI and making it call him names. We all know his superior intellect will totally save the world and make it a better place, you just gotta let him go completely unchecked to do it.

/s

not so funny thing to say anymore, since there are people who would say stuff like this seriously

Damn, that’s hard. And Melon Husk will soon be the new Chef of NASA!

Actually, they made a new “Department of Government Efficiency” for him…

Which sounds scummy, but it’s basically just a department that looks for places to cut the budget and reduce waste… Not a bad idea, except it’s right-wingers running it, so “Food” would be an example of frivolous spending and “Planes that don’t fly” would be where they’re looking to keep the cash flowing

That sounds almost worse .__. At least with Musk behind the desk.

With Musk what he’d see as wasteful is… anything that isn’t his fucking kickbacks or programs that make his ex-wife start returning his calls.

Which ex-wife exactly? There are just too many…

He said he was gonna cut the federal budget by ~30%, or roughly two trillion dollars. I saw an economist say that if you fired Every. Single. Govt. Employee it still wouldn’t save two trillion dollars. It’s just absolutely insane.

Sharpen up the 'tines, me hearties. The time is nigh.

Dang, all those poor people having to eat anything he cooks.

Elon Mush: too rich to care.

OK, OK, mostly too rich to care; he’s pretty thin-skinned.

Seriously though, when he was forced to complete the purchase of Twitter, I thought he was just an idiot who couldn’t run a company. Over the years, I’ve come to believe that he’s an idiot who doesn’t care about anything but staying rich, and none of the really stupid stuff he’s doing moves the needle.

He’s still an idiot, but if it doesn’t break him, he just wants the attention and more opportunities to make more money.

I don’t think Musk would disagree with that definition and I bet he even likes it.

The key word here is “significant”. That’s the part that clearly matters to him, based on his actions. I don’t care about the man and I don’t think he’s a genius, but he does not look stupid or delusional either.

Musk spreads disinformation very deliberately for the purpose of being significant. Just as his chatbot says.

It won’t matter when the Russian military cuts the undersea internet cables. Leon will have the only working web connection tech then.