If you’ve watched any Olympics coverage this week, you’ve likely been confronted with an ad for Google’s Gemini AI called “Dear Sydney.” In it, a proud father seeks help writing a letter on behalf of his daughter, who is an aspiring runner and superfan of world-record-holding hurdler Sydney McLaughlin-Levrone.

“I’m pretty good with words, but this has to be just right,” the father intones before asking Gemini to “Help my daughter write a letter telling Sydney how inspiring she is…” Gemini dutifully responds with a draft letter in which the LLM tells the runner, on behalf of the daughter, that she wants to be “just like you.”

I think the most offensive thing about the ad is what it implies about the kinds of human tasks Google sees AI replacing. Rather than using LLMs to automate tedious busywork or difficult research questions, “Dear Sydney” presents a world where Gemini can help us offload a heartwarming shared moment of connection with our children.

Inserting Gemini into a child’s heartfelt request for parental help makes it seem like the parent in question is offloading their responsibilities to a computer in the coldest, most sterile way possible. More than that, it comes across as an attempt to avoid an opportunity to bond with a child over a shared interest in a creative way.

This is one of the weirdest of several weird things about the people who are marketing AI right now.

I just went to ChatGPT, and one of its suggested prompts is “Message to comfort a friend.”

If I was in some sort of distress and someone sent me a comforting message, and I later found out they had ChatGPT write the message for them, I think I would abandon the friendship as a pointless endeavor.

What world do these people live in, where they’re like “I wish AI would write meaningful messages to my friends for me, so I didn’t have to”?

What they’re trying to market to is the fact that a lot of people genuinely don’t know what to say at certain times. Instead of replacing an emotional activity, it’s meant to be used when you literally can’t do it but need to.

Obviously that’s not the way it should go, but it is an actual problem they’re trying to speak to. In high school I had a friend who felt really down because his parents didn’t attend an award ceremony, and I couldn’t help because I just didn’t know what to say. AI could hypothetically have given me a rough draft or some inspiration. Obviously I wouldn’t have just texted what the AI said, but it could have gotten me past the part I was stuck on.

In my experience, AI is shit at that anyway. Nine times out of ten, when I ask it anything even remotely deep, it just restates the problem, like “I’m sorry to hear your parents couldn’t make it.” AI can’t really solve the problem Google wants it to, and I’m honestly glad it can’t.

They’re trying to market emotion because emotion sells.

It’s also exactly what AI should be kept away from.

I would abandon the friendship as a pointless endeavor

You’re in luck, you can subscribe to an AI friend instead. /s

You’ve seen porn addiction yes, but have you seen AI boyfriend emotional attachment addiction?

Guaranteed to ruin your life! Act now.

The article mentions the early part of the movie Her, where the main character writes a heartfelt, personal card that turns out to be his job: writing letters from one stranger to another. That reference was exactly on target. I think most of us thought outsourcing such a thing was a completely bizarre idea, and it is. It’s arguably even worse when you’re outsourcing not to someone with emotions but to an AI.

These seem like people who treat relationships like a game or an obligation instead of really wanting to know the person.

If I was in some sort of distress and someone sent me a comforting message, and I later found out they had ChatGPT write the message for them, I think I would abandon the friendship as a pointless endeavor.

My initial response is the same as yours, but I wonder… If the intent was to comfort you and the effect was to comfort you, wasn’t the message effective? How is it different from using a cell phone to get a reminder about a friend’s birthday rather than memorizing when the birthday is?

One problem that both the AI message and the birthday reminder have is that they don’t require much effort. People apparently appreciate having effort expended on their behalf even if it doesn’t create any useful result. This is why I’m currently making a two-hour round trip to bring a birthday cake to my friend instead of simply telling her to pick the one she wants, have it delivered, and bill me. (She has COVID, so we can’t celebrate together.) I did make the mistake of telling my friend that I had a reminder in my phone for this, so now she knows I didn’t expend the effort to memorize the date.

Another problem that only the AI message has is that it doesn’t contain information that the receiver wants to know, which is the specific mental state of the sender rather than just the presence of an intent to comfort. Presumably if the receiver wanted a message from an AI, she would have asked the AI for it herself.

Anyway, those are my Asperger’s musings. The next time a friend needs comforting, I will tell her “I wish you well. Ask an AI for inspirational messages appropriate for these circumstances.”

Another problem that only the AI message has is that it doesn’t contain information that the receiver wants to know, which is the specific mental state of the sender rather than just the presence of an intent to comfort.

I don’t think the recipient wants to know the specific mental state of the sender. Presumably, the person is already dealing with a lot, and it’s unlikely they’re spending much time wondering what friends not going through it are thinking about. Grief and stress tend to be kind of self-centering that way.

The intent to comfort is the important part. That’s why the suggestion of “I don’t know what to say, but I’m here for you” can actually be an effective thing to say in these situations.

“Dear Sydney” presents a world where Gemini can help us offload a heartwarming shared moment of connection with our children.

This is the problem I’ve had with LLM announcements ever since they first came out. One of their favorite examples is writing a thank-you note.

The whole point of a thank-you note is that you didn’t have to write it, but you took time out of your day anyway to find your own words to thank someone.

Sincerity is a foreign concept to MBAs, VCs, and anyone who thinks they’re on a business Grind Set. They view the world as a game and interpersonal relationships as a game mechanic.

Ugh, who has time for that? I need all of my waking hours to be devoted to increasing work productivity and consuming products. Computers can feel my pesky feelings for me now.

I agree. This ad was immediately disgusting, cringy, and deflated my already floundering hope for humanity. Google sucks.

Google is the Yahoo of 2000.

“This message really needs to be passionate and demonstrate my emotional investment, I’d better have a text generation algorithm do it for me”

“Hey Google, raise my children.”

People have been trying that for a while. It’s not working too well.

I mean it kinda already is with all the parents putting kids in front of YouTube to watch Pregnant Spiderman breastfeed baby Elsa.

That’s the perpetuum mobile of a certain kind of utopia.

Bolsheviks literally dreamed of “child combinates” (why anyone would call it that, I dunno), where workers would offload their children to be cared for while they themselves could work and enjoy their lives and such.

I’d say this tells enough about the kind of people these dreamers were and also that they didn’t have any children of their own.

Though this is in the same vein as the “glass of water” thing, which hints that there also weren’t many women among them.

For some people utopia is a kind of Sparta with spaceships, where not only everything is common and there’s no money, but also people own nothing, decide nothing, hold on to nothing, and children are collective property.

Robot, experience this dramatic irony for me!

leans back and sips beer

I saw a movie the other day, and all of the ads before the previews were about AI. It was awful, and I hated it. One of them was this one, and yes… Terrible.

So in the spring I got a letter from a student telling me how much they appreciated me as a teacher. At the time I was going through some s***. Still am, frankly. So it meant a lot to me. That was such a nice letter.

I read it again the next day and realized it was too perfect. Some of the phrasing, and some of the punctuation, just didn’t make sense for a high school student.

I have no doubt the student was sincere in their appreciation for me, but once I realized what they had done, it cheapened those happy feelings. Blah.

You should’ve asked Gemini what to feel about it and how to respond…

That’s the problem with how they’re doing it: everyone seems to want AI to do everything, everywhere.

It’s getting on my nerves, because more and more customers want AI integrated into their websites somehow, even when they have no use for it.

We’ve created a society of antisocial people optimized into efficient working machines, to the point of drugging the ones who struggle with it.

Of course they want AI to do it for them and end human interactions. It’s simpler that way.

I’m curious: if they had gone to their parent, given them the same info, and arrived at the same message… would it have felt less cheap?

And do you know that isn’t the case? “Hey mom, I’m trying to write something nice to my teacher. This is what I have, but it feels weird. Can you make a suggestion?” is a perfectly reasonable thing to have happened.

I think there’s a different amount of effort involved in the two scenarios, and that does matter. In your example, the kid has already drafted the letter, and adding in a parent makes it take longer and involve more effort. I think the assumption is that they didn’t go to AI with a draft letter but had it spit one out from a much easier-to-write prompt.

… But why did it cheapen it when they’re the one who sent it to you? Because someone helped them write it, the message is somehow meaningless?

That seems positively callous in the worst possible way.

It’s needless fearmongering: supposedly it doesn’t count, for the arbitrary reason that it’s not how we used to do things in the good old days.

No encyclopedia references… No using the internet… No using Wikipedia… No quoting, as if language and experience weren’t shared and built on the shoulders of previous generations. LLMs are the equivalent of a literal human reference dictionary, for the things people want to say but can’t recall themselves, or for when they simply want to save time in a world where time is more precious than almost anything lol.

The only reason anyone should dislike AI is the power draw. And nearly every AI company is investing more in renewables than anyone else, while people pretend data centers are the bane of existence as they post on Lemmy, watch YouTube, and play online games lol.

In his article “On Artificial Intelligence and Authenticity,” David Joyner gives an excellent example of how AI can cheapen what gives a gift its meaning: the thought and effort that go into it.

In the opening synchronous meeting for one such class this semester, I was asked about this policy: if the work itself is the same, what does it matter whether it came from AI or not? I explained my thoughts with an analogy: imagine you have an assistant, whether that is an executive assistant at work or a family assistant at home or anyone else whose professional role is helping you with your role. Then, imagine your child’s (or spouse’s, I actually can’t remember which example I used in class) birthday is coming up. You could go out and shop for a present yourself, but you’re busy, so you ask this assistant to go pick out something. If your child found out that your assistant picked out the gift instead of you, would we consider it reasonable for them to be disappointed, even if the gift itself is identical to the one you would have purchased?

My class (those that spoke up, at least) generally agreed yes, it would be reasonable to expect the child to be disappointed: the gift is intended to represent more than just its inherent usefulness and value, but also the thought and effort that went into obtaining it. I continued the analogy by asking: now imagine if the gift was instead a prize selected for an employee-of-the-month sort of program. Would it be as disappointing for the assistant to buy it in that case? Likely not: in that situation, the gift’s value is more direct.

The assistant parallel is an interesting one, and I think it comes through in how I use LLMs as well. I’d never ask an assistant to both choose and buy a present for someone, but I could see myself asking them to buy a gift I’d chosen. Or maybe even to do some research on a particular kind of gift. For example, my gift ideas list has “lightweight step stool” for a family member; I’d love to outsource the research to find a few examples of what’s on the market, then choose from those. The idea is mine, the ultimate decision would be mine, but some of the busywork to get there would be outsourced.

Last year I also wrote thank-you letters to everyone on my team for Associate Appreciation Day with the help of an LLM. I’m obsessive about my writing, and I know that if I’d done that activity from scratch, it would easily have taken me 4 hours. I cut it down to about 1.5 hours by starting with a prompt like “Write an appreciation note in first person to an associate who…” and then providing a bulleted list of their accomplishments. It produced a first draft, and I modified it heavily from there, bouncing things off the LLM for support.

One associate was underperforming, and I had the LLM help me be “less effusive” and to “praise her effort” more than her results so I wasn’t sending a message that conflicted with her recent review. I would have spent hours finding the right ways of doing that on my own, but it got me there in a couple exchanges. It also helped me find synonyms.

In the end, the note was so heavily edited by me that it was in my voice. And as I said, it still took me ~1.5 hours to do for just the three people who reported to me at the time. So, like in the gift-giving example, the idea was mine, the choice was mine, but I outsourced some of the drafting and editing busy work.

IMO, LLMs are best when used to simplify or support your work on a task, not to replace you in doing it.

“Hey Google, please write a letter from my family, addressed to me, that pretends that they love me deeply, and approve of me wholly, even though I am a soulless, emotionless ghoul that longs for the day we’ll have truly functional AR glasses, so that I can superimpose stock tickers over the top of their worthless smiles.”

“As a large language model, I’m not capable of providing a daydream representation of your innermost desires or fulfilling your emotional requests. Please subscribe to have an opportunity to unlock these advanced features in one of our next beta releases.”

The people making these ads can’t fathom anything past pure efficiency. It’s what their entire job revolves around: efficiently using corporate resources to maximize the number of people using or paying for a product.

Sure, I would like to be more efficient when writing, but that doesn’t mean writing the whole letter for me, it means giving me pointers on how to start it, things to emphasize, or how to reword something that doesn’t sound quite right, so I don’t spend 10 minutes staring at an email wondering if the way I worded it will be taken the wrong way.

AI is a tool, it is not a replacement for humans. Trying to replace true human interaction with an LLM is like trying to replace an experienced person’s job with a freshly hired intern with no experience. Sure, they can technically do the job, but they won’t do it well. It’s only a benefit when the intern works with the existing knowledgeable individuals in the field to do better work.

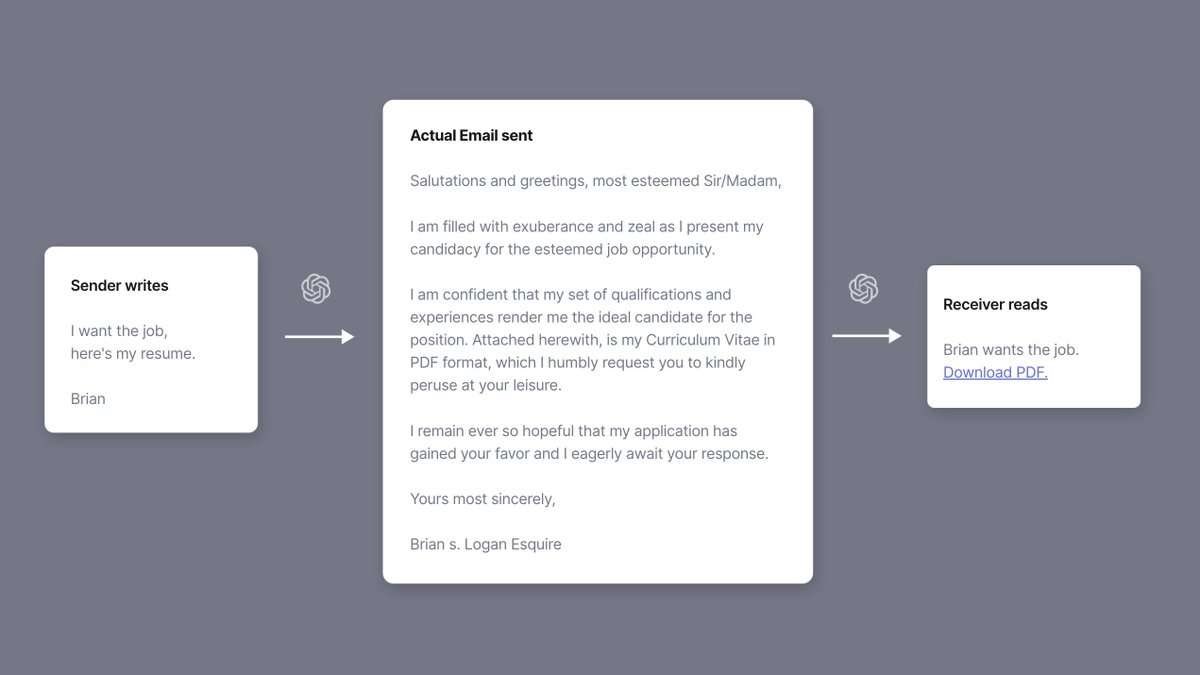

If we try to use AI to replace the entire process, we just end up with this:

That flowchart example is idiotic but I love it. The formal cover letter in between is more idiotic. It would be cool if we could collectively agree to just send “I’d like this job” instead of all the bullshit.

But you and everyone else would just say “I want this job,” and they want the best person for the job. Putting up with bullshit is invariably going to be part of the job.

They can compare my resume with the other applicants’. I don’t mind.

That’s not fan mail. That’s spam.

Yeah, fully agree. This is one of the reasons big tech is dangerous with AI: their sense of humanity and their instincts about what’s right are way off.

Oozes superficiality. Say anything, do anything for market share.

Pshh fellow comrades…

Then you haven’t seen the movie theater ad where they ask Gemini AI to write a breakup letter for them.

Anyone who does that deserves to be alone for the rest of their days.

Ah, yes. I’m mostly on the receiving end of those and haven’t had much luck in relationships. But getting ghosted after a few forced words, uneasy looks, and maybe even some hurt, mocking remarks about parts of my personality I can’t change is one thing: it’s unjust, but it’s still human, so OK.

A generated letter with generated reasons and generated emotions, though, feels, eh, just like something from the first girl I cared about. Only, her parents had amimia, so it wasn’t entirely her fault that everything she said felt 90% fake (though it took me 10 years to accept that what she did really was a betrayal).